The Brick Wall

With reading as my primary focus in learning Chinese, a large part of my study is acquiring new words. Some vocabulary comes from general word lists such as the HSK, while much of it is tied to a specific text I am reading, in order to increase my level of comprehension. While many approach reading in a foreign language by looking up unknown words as they are encountered, I prefer to learn them ahead of time, to avoid breaking my concentration while reading. With my bad habit of perfectionism, my main strategy in the past for learning these words has been the “Brick Wall Method”:

The Brick Wall Method – Learn every unknown word you encounter, no matter how difficult or rare it is

My theory has been — like being a brick wall against a tennis player — to not let any unknown word get past me, so that eventually I will run out of unknown words and thus will have learned the language. If a word is used in a text, it’s clearly important to some nominal degree, and if it’s used once, then it’s more likely to be seen again at some point, versus all the words that aren’t in the text.

This clearly leads to large quantities of words to be learned. For news articles or short essays of around 1,000 words or so, the list is manageable. However, as I progress from short essays to reading full novels, the amount of vocabulary is huge. For the book I am currently reading, 《中国的逻辑》, I have been keeping track of the unknown words that I have selected for study. Here is the breakdown of vocabulary for a series of chapters.

| Chapter | Words | Unique words | Newly introduced words | Words for study | % of new words for study |
|---|---|---|---|---|---|
| 2 | 2097 | 869 | 869 | 194 | 22% |
| 3 | 1964 | 945 | 640 | 173 | 27% |
| 4 | 1466 | 702 | 316 | 126 | 40% |
| 5 | 1514 | 751 | 326 | 135 | 41% |
| 6 | 2132 | 879 | 443 | 150 | 34% |
| 7 | 1509 | 717 | 243 | 78 | 32% |
| 8 | 1965 | 813 | 240 | 86 | 36% |
| 9 | 1631 | 671 | 229 | 97 | 42% |
| 10 | 2077 | 936 | 312 | 149 | 48% |
| Total | 16355 | 7283 | 3618 | 1188 | 33% |
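For anyone who wants to tally columns like these for their own reading, here is a minimal sketch. It assumes each chapter has already been segmented into a list of words (Chinese has no spaces, so some segmentation step has to come first) and that you keep a set of words you already know. The names `chapter_stats`, `chapters`, and `known_words` are made up for illustration, and treating "words for study" as "newly introduced words not in the known set" is my assumption about how the counts relate.

```python
def chapter_stats(chapters, known_words):
    """For each chapter (a list of word tokens), report vocabulary counts."""
    seen = set()  # every word type met in earlier chapters
    rows = []
    for tokens in chapters:
        types = set(tokens)
        new_types = types - seen            # words introduced in this chapter
        to_study = new_types - known_words  # assumption: new words the reader does not know
        pct = round(100 * len(to_study) / len(new_types)) if new_types else 0
        rows.append({
            "words": len(tokens),      # running words (tokens)
            "unique": len(types),      # unique words within the chapter
            "new": len(new_types),     # newly introduced words
            "study": len(to_study),    # words for study
            "pct_study": pct,          # share of new words selected for study
        })
        seen |= types
    return rows


# Toy example with two tiny "chapters"
rows = chapter_stats(
    [["a", "b", "a", "c"], ["a", "d", "e", "d"]],
    known_words={"a", "b"},
)
for row in rows:
    print(row)
```

Note that the percentage is taken against the newly introduced words, not against all the words in the chapter.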

For 9 chapters of a book (which constitute 25% of the full text), I have identified nearly 1,200 unfamiliar words, which, according to the Brick Wall Method, should all be learned. Note that across successive chapters, the number of new words generally decreases as the pool of existing words grows, but the proportion of those new words that I need to study does not; if anything, it tends to rise. A likely explanation is that as the frequent words get introduced in previous chapters, the new words in each successive chapter become increasingly rare, and thus more likely to be unknown.

Results like this show the weakness in the naive assumptions of the Brick Wall Method. The appearance of a rare word is no guarantee that it will be used again in the text, just as today’s winning lottery number is no more likely to win tomorrow than the losing numbers. If we eliminate the words that are only used once in a text, does it cut down the amount of vocabulary to study? Enter the hapax legomena.

Hapax Legomena

A hapax legomenon (from the Greek “something said only once”) is simply a fancy term for a word that only occurs once in a single context — an essay, a book, a large corpus, or an entire language. In a Zipf plot of word frequency vs. frequency rank, the hapax legomena are plotted at the lowest, farthest right end of the graph. The Zipf plot for 《哈利波特与魔法石》 is represented as follows.

The Zipf plot does a poor job of illustrating this class of words. As a normal plot, the range of the y-axis is so large that the frequency=1 words are indistinguishable from the x-axis. As a log-log plot of the same data, the logarithmic scale squeezes all these same words into a tiny subinterval between ticks, having the same horizontal width as the top 2 words.

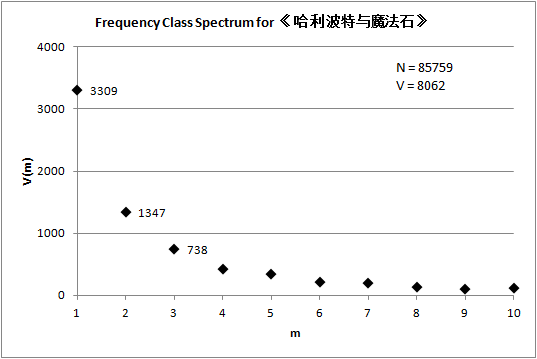
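To see why the hapax words get squeezed together, it helps to look at the points behind a Zipf plot. The following is only a sketch, assuming a pre-segmented list of words; `zipf_points` and `log_log` are hypothetical helper names, not part of any library.

```python
from collections import Counter
import math


def zipf_points(tokens):
    """Return (rank, frequency) pairs, most frequent word first."""
    freqs = sorted(Counter(tokens).values(), reverse=True)
    return list(enumerate(freqs, start=1))


def log_log(points):
    """Map (rank, frequency) pairs to log10 coordinates for a log-log plot."""
    return [(math.log10(rank), math.log10(freq)) for rank, freq in points]


tokens = "的 的 的 我 我 书".split()
points = zipf_points(tokens)
print(points)
print(log_log(points))
```

Every word with frequency 1 lands at log-frequency 0, and because the ranks of those words are all large, their log-ranks differ only slightly from one another.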

Another way of plotting the word statistics brings the lower frequency words into better focus. The frequency class spectrum plots the number of occurrences, or frequency classes, on the x-axis, and the number of unique words having that many occurrences on the y-axis. To illustrate, below is the frequency spectrum for the first 10 frequency classes in 《哈利波特》. In this graph, frequency class m=1 represents all the hapax words in the entire novel. Past the edge of the graph, the right-most point at m=4195 has a vocabulary size of 1; in other words, 的 is the only word used 4,195 times in the text.

What may seem surprising is not only that there are so many words only used once in the text, but that they dominate all the other frequency classes by far. The number of hapax legomena is more than twice the number of dis legomena (words used exactly twice). In 《哈利波特》, hapax legomena comprise 41% of the unique words in the complete novel. This is not unusual for that particular text. From 1,000-word news articles to large corpora like the Lancaster Corpus of Mandarin Chinese, the frequency spectrum consistently shows the same shape, and the percentage of vocabulary only used once in the text is consistently between 30 and 50 percent.

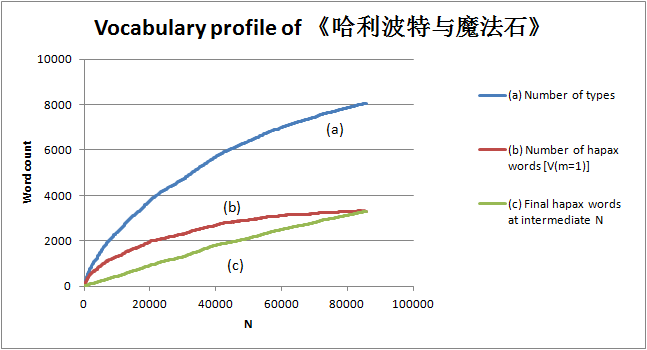
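The frequency spectrum described above is easy to compute once a text is split into words. Here is a small sketch, again assuming a pre-segmented token list; `frequency_spectrum` and `hapax_share` are illustrative names of my own.

```python
from collections import Counter


def frequency_spectrum(tokens):
    """Map each frequency class m to the number of word types used exactly m times."""
    word_counts = Counter(tokens)          # word -> number of occurrences
    return Counter(word_counts.values())   # m -> number of words with that count


def hapax_share(tokens):
    """Fraction of word types that occur exactly once."""
    spectrum = frequency_spectrum(tokens)
    total_types = sum(spectrum.values())
    return spectrum[1] / total_types if total_types else 0.0


tokens = ["的", "的", "的", "我", "我", "书", "猫"]
print(dict(frequency_spectrum(tokens)))
print(hapax_share(tokens))
```

In this toy text, two of the four unique words are hapax legomena, so the share is one half.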

Another way of representing the characteristics of hapax legomena is by plotting a spectral element profile. This plots the absolute count of a frequency class as a function of the running words (N) in the text. For the class m=1, the slope will be 1 at the first data point N=1, since the only word in a text size of one is guaranteed to be used exactly once. If the second word in the text is not a repeat of the first, the slope will continue to be unity, and it will continue to be so until words inevitably begin to repeat. As N increases, the number of words in the m=1 class will oscillate higher and lower as words are reused and new words are introduced, but at a broader scale a smoother curve can be seen. Thus, the spectral profile shows how the size of a particular frequency class develops as the text progresses.

The graph above tracks three different measurements along the development of the text. The top plot (a) represents the number of unique words (types, in linguistic terms) encountered to that point in the text, versus the total number of running words (tokens). Thus, this curve illustrates what is commonly known as the type-token ratio. The rate of increase in vocabulary starts at unity but is quickly reduced, yet it never quite levels off to zero. The middle plot (b) represents the total number of hapax legomena at any point in the text. In 《哈利波特》, this number also starts with a slope of unity which quickly drops off, reaching a maximum value of 3,309. In contrast with the type-token curve, the spectral element curve can trend downward in large texts (which it hints at doing in this plot), as the reserve of unused words in the language becomes depleted and the rate of word reuse overtakes new word introduction. Plot (c) follows the 3,309 words that are still hapax legomena at the end of the text, counting them at the point where their only occurrence is encountered. The nearly linear growth of the curve illustrates that the introduction of these words is fairly evenly spaced throughout the text, rather than concentrated at a particular point in the novel.
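The three curves can be built in a single pass over the text. This is a minimal sketch under the same assumption of a pre-segmented word list; the function names are hypothetical. The key step is that a word joins class m=1 on its first sighting and leaves it on its second.

```python
from collections import Counter


def growth_profile(tokens):
    """Return, for each N, (N, types seen so far, hapax legomena so far)."""
    counts = Counter()
    hapaxes = 0
    profile = []
    for n, word in enumerate(tokens, start=1):
        counts[word] += 1
        if counts[word] == 1:
            hapaxes += 1   # first sighting: the word joins class m=1
        elif counts[word] == 2:
            hapaxes -= 1   # second sighting: the word leaves class m=1
        profile.append((n, len(counts), hapaxes))
    return profile


def final_hapax_positions(tokens):
    """1-based positions of the words that are still hapax at the end of the text."""
    counts = Counter(tokens)
    return [n for n, word in enumerate(tokens, start=1) if counts[word] == 1]


tokens = ["a", "b", "a", "c", "b", "d"]
print(growth_profile(tokens))
print(final_hapax_positions(tokens))
```

The second and third values in each tuple correspond to curves (a) and (b), while the positions from `final_hapax_positions` give the steps of curve (c).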

And the Winner Is…

From the evidence gathered above, the Brick Wall Method of vocabulary learning has some holes in it. Learning every word you find with the expectation that you will eventually run out of new words to encounter is to play against crushing odds. The type/token curve simply doesn’t flatten out fast enough for this to work. Also, learning rare words with the expectation that they will be reused in the near term is somewhat optimistic, as around 30-50% of the vocabulary in any text will never be seen again in the same text. Both of these traits are due to the rarity of the majority of the words in a language, or what Harald Baayen (ref. below) calls “large numbers of rare events”, or LNRE.

The approach I now take is as follows. I still attempt to identify all the unknown words in a particular text of around 1,000 to 2,000 words (around the size of a news article or book chapter). However, I make a distinction between words used multiple times in the text versus words used only once, and I create two separate word lists. The list of multiple-occurrence words I will focus more attention on, making sure I understand them well, and potentially making SRS items out of them. The single-occurrence words, however, I will still study as I am reading the text, but with the goal of getting familiar with the words, knowing them just well enough to make the text clearer. The table below illustrates this revised approach as it relates to words in 《中国的逻辑》:

| Chapter | Words | Newly introduced words | Unknown words used >1 time | Unknown words used once |
|---|---|---|---|---|
| 2 | 2097 | 869 | 68 | 126 |
| 3 | 1964 | 640 | 19 | 154 |
| 4 | 1466 | 316 | 19 | 107 |
| 5 | 1514 | 326 | 22 | 113 |
| 6 | 2132 | 443 | 34 | 116 |
| 7 | 1509 | 243 | 15 | 63 |
| 8 | 1965 | 240 | 11 | 75 |
| 9 | 1631 | 229 | 18 | 79 |
| 10 | 2077 | 312 | 22 | 127 |
| Total | 16355 | 3618 | 228 | 960 |
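Splitting a chapter's unknown words into the two lists can be sketched in a few lines. As before, this assumes the chapter is already segmented and that a set of known words is available; `split_study_list` and its parameters are hypothetical names, and counting occurrences within a single chapter is my reading of the approach described above.

```python
from collections import Counter


def split_study_list(tokens, known_words):
    """Split the unknown words of one text into (repeated, single_use) lists."""
    counts = Counter(tokens)
    repeated, single_use = [], []
    # most_common() lists words from most to least frequent
    for word, n in counts.most_common():
        if word in known_words:
            continue
        if n > 1:
            repeated.append(word)     # candidates for careful study or SRS
        else:
            single_use.append(word)   # enough to get familiar with while reading
    return repeated, single_use


tokens = ["a", "x", "b", "x", "y", "a", "z"]
repeated, single_use = split_study_list(tokens, known_words={"a", "b"})
print(repeated)
print(single_use)
```

Because the repeated list comes out ordered by frequency, the words that matter most for the chapter end up at the top.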

This approach has made my vocabulary study much more manageable, as the multiply-occurring words make much smaller sets. It’s true that by partitioning words based on the number of times they are seen in a chapter I am ignoring other factors, such as a word’s frequency in Chinese in general, or whether it’s an important keyword for comprehending a passage despite its sole occurrence. The first of these factors is of low importance to me, since my primary aim at the moment is reading for pleasure, and not large-scale vocabulary acquisition. As for the second, hapax words can sometimes be important to understanding a passage, and I can’t avoid dictionary lookups entirely.

Even if you’re not a word freak like me, it’s still useful to be aware of word distributions, and the high probability of hapax legomena in whatever you happen to be reading.

References

Baayen, R. Harald. Word Frequency Distributions. Dordrecht: Kluwer Academic, 2001. This book examines statistical methods for analyzing word frequencies, and investigates many ways of modeling their phenomena. The kind of charting demonstrated in this post is just a small example of the kind of analysis that can be found.