By 2008, I had been studying Chinese off and on for around 3 years. As a self-learner, I studied rather eclectically: Pimsleur, Chinesepod, and random flash card lists were my main methods. I was far from fluent, still struggling to understand all but the simplest news articles, fiction, or blog posts. Still, I felt that I knew a lot of words; I just didn't know how many. How much longer would it be before reading started to get easy? So I undertook a self-examination to estimate how many Chinese words I actually knew.

The method I used was similar to the level tests of Paul Nation or Paul Meara. In their studies of the English vocabulary knowledge of ESL students, both had split a list of the few thousand most frequent word families into bands of 1,000 consecutive ranks, ordered by frequency in the language. Sample words from each band were then tested, and the percentage of sampled words known was extrapolated to the size of the whole band. A bar chart of these results represents a word knowledge profile for a particular test subject. The sum of the bar areas gives an estimate of the number of words the person knows (a lower bound, since the base word list covers only a few thousand words out of the whole language). However, the number alone is not very useful unless it can be tied to more meaningful measures of fluency, or unless the test can be repeated on separate occasions under the same conditions to measure the subject's progress.
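The band extrapolation amounts to a weighted sum: each band's sample proportion multiplied by the number of words in the band. Here is a minimal Python sketch. It assumes each band is recorded as its first and last rank plus the counts of sampled and known words. The `Band` class and `estimate_known_words` function are hypothetical names for illustration, not part of either researcher's test.

```python
from dataclasses import dataclass


@dataclass
class Band:
    first_rank: int  # first word rank in the band (inclusive)
    last_rank: int   # last word rank in the band (inclusive)
    sampled: int     # number of words tested from this band
    known: int       # number of tested words marked "known"

    @property
    def size(self) -> int:
        return self.last_rank - self.first_rank + 1

    @property
    def proportion_known(self) -> float:
        return self.known / self.sampled


def estimate_known_words(bands: list[Band]) -> float:
    """Extrapolate each band's sample proportion to the whole band and sum the bars."""
    return sum(band.proportion_known * band.size for band in bands)


# Usage: the May 2008 rows reported later in this post.
may_2008 = [
    Band(1, 1000, 100, 80),
    Band(1001, 2000, 100, 47),
    Band(2001, 3500, 100, 33),
    Band(3501, 6000, 100, 29),
    Band(6001, 10000, 100, 19),
    Band(10001, 18000, 100, 9),
    Band(18001, 34507, 100, 13),
]
print(round(estimate_known_words(may_2008)))  # 6116
```

A band that is tested in full (sampled equal to size) simply contributes its exact count, so the same function also covers an exhaustive test.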

Typical profile for an EFL student (Meara, 1992; Milton, 2009)

First tests – 2008

My first foray into estimates of known Chinese words was in early 2008. I used a test similar to that described above, with a few modifications:

word source

My source of Chinese words was the word counts from the Lancaster Corpus of Mandarin Chinese (LCMC). Earlier, I had converted the individual words from the original XML files into an SQLite3 database, so getting a word frequency list was just a matter of a simple query. From the original data, I filtered the tokens as follows:

- include only token type “w” (ignore punctuation)

- exclude parts of speech “nr” (personal first and last names) and “nx” (ASCII letters, symbols, and other non-Chinese strings)

- exclude parts of speech “t” (time words) and “m” (numerals) when the token consists only of numerals, or of numerals combined with 第, 月, or 年

- exclude the non-fiction corpus categories A, B, and C (press), D (religion), and H (miscellaneous reports and official documents)
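The filtering rules above can be sketched in Python as a per-token predicate followed by a frequency count. This is an illustration only, not the original SQL query. It assumes a hypothetical token record `(text, token_type, pos, category)` in which punctuation has a token type other than "w" (shown here as "c"). It also assumes that "numbers" means Arabic digits (ASCII or full-width), so tokens written with Chinese numeral characters such as 三 are kept.

```python
from collections import Counter

EXCLUDED_POS = {"nr", "nx"}                      # personal names; non-Chinese strings
NUMERIC_POS = {"t", "m"}                         # time words; numerals
EXCLUDED_CATEGORIES = {"A", "B", "C", "D", "H"}  # press, religion, misc. official docs
DIGITS = set("0123456789０１２３４５６７８９")     # assumption: Arabic digits only
AFFIXES = set("第月年")


def is_numeric_token(text: str) -> bool:
    """True for tokens made only of digits, optionally with 第, 月 or 年 (e.g. 1990年)."""
    core = [ch for ch in text if ch not in AFFIXES]
    return bool(core) and all(ch in DIGITS for ch in core)


def keep_token(text: str, token_type: str, pos: str, category: str) -> bool:
    """Apply the four filtering rules to one corpus token."""
    if token_type != "w":                         # skip punctuation
        return False
    if pos in EXCLUDED_POS:                       # names and non-Chinese strings
        return False
    if pos in NUMERIC_POS and is_numeric_token(text):
        return False
    return category not in EXCLUDED_CATEGORIES


# Hypothetical token records: (text, token_type, pos, category).
tokens = [("我", "w", "r", "K"), ("。", "c", "ew", "K"), ("１９９０年", "w", "t", "K"),
          ("年", "w", "t", "K"), ("王", "w", "nr", "K"), ("油田", "w", "n", "A")]
frequencies = Counter(t[0] for t in tokens if keep_token(*t))
ranked = [word for word, _ in frequencies.most_common()]
print(ranked)
```

A bare 年 survives the numeral rule because it contains no digits. Only mixed tokens such as １９９０年 are dropped.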

The last criterion was meant to limit the influence of specialized and ephemeral words: the LCMC press data is mostly from 1990-1991, which makes words like 油田 (oil field) and 苏联 (Soviet Union) unusually frequent in the corpus as a whole.

word frequency range

Rather than use bands of 1,000 consecutive word ranks, I experimented with different band sizes and word ranges to examine their effect on the results. In one trial, I tested myself on all of the top 1,000 words, split into bands of 200 words. The testing process was quite tedious, so it was not repeated. In another trial, I tested a sample of 100 words per band, with bands of varying size. These started with band 1 (ranks 1–1,000) and widened until the last band covered ranks 6,001–10,000. Testing a small sample from each band gave a broader picture of my knowledge of rarer words, sacrificing some accuracy to reduce the testing burden. In a third trial, I widened the ranges even more boldly for the same sample size. It still started with band 1 (ranks 1–1,000), but its final band contained words ranked 18,001 to 34,507 (the number of words in the full data set).
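Drawing the per-band samples is a form of stratified random sampling. Each band is sampled independently, so a small band can be tested exhaustively while a huge one gets the same 100 words. Here is a minimal Python sketch. It assumes the frequency list is already a Python list ordered from rank 1 downward. The function name and band constant are illustrative, not the author's original program.

```python
import random

# (first_rank, last_rank) pairs, 1-based and inclusive, as in the May 2008 trial.
MAY_2008_BANDS = [(1, 1000), (1001, 2000), (2001, 3500), (3501, 6000),
                  (6001, 10000), (10001, 18000), (18001, 34507)]


def sample_bands(ranked_words, bands, per_band, seed=None):
    """Draw a simple random sample (without replacement) of words from each rank band."""
    rng = random.Random(seed)
    samples = {}
    for first, last in bands:
        band_words = ranked_words[first - 1:last]  # rank r is at index r - 1
        k = min(per_band, len(band_words))         # a small band is tested in full
        samples[(first, last)] = rng.sample(band_words, k)
    return samples


# Usage with placeholder words "w1", "w2", ... standing in for the real frequency list.
ranked = [f"w{r}" for r in range(1, 34508)]
samples = sample_bands(ranked, MAY_2008_BANDS, per_band=100, seed=2008)
print({band: len(words) for band, words in samples.items()})
```

Setting `per_band` to the band size (for example, 200-word bands with `per_band=200`) reproduces the exhaustive 1,000-word test.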

word testing method

The way I tested each word was simply to import the sample data into a flashcard program, with each frequency band as a separate flashcard set. I then reviewed each word in turn, marking it as known or unknown. The import data was generated by a quick program I wrote. It chose a random sample from each band and then looked up each word's pinyin and English definition in my local copy of the CC-CEDICT dictionary. My criterion for knowing a word was whether, on seeing the Chinese characters, I could recall its pinyin and basic English definition within a few seconds. In most cases, it was obvious whether I knew a word or not. However, in 5–10 cases, a word was on the tip of my tongue, but I couldn't recall its meaning until I saw the correct answer. I chose to put these words at the back of the queue, giving myself a second chance to get them right. After a fresh retrial, it was always clear whether I did, in fact, know the word and simply couldn't recall it at the earlier moment. The ones I couldn't recall immediately on the second try were marked unknown at that point. It was a slight cheat, but I rationalized it as fatigue from testing so many words. I also reasoned that I would easily have known these words in the context of a sentence.
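The second-chance rule described above can be written as a simple review queue. This Python sketch assumes a hypothetical `judge` callback that stands in for the person at the flashcards and returns "known", "unknown", or "unsure". The original flashcard program's interface is not specified. An "unsure" word goes to the back of the queue once; a second "unsure" counts as unknown.

```python
from collections import deque
from typing import Callable, Dict, Iterable


def review(words: Iterable[str], judge: Callable[[str], str]) -> Dict[str, bool]:
    """Mark each word known (True) or unknown (False), allowing one retry for 'unsure' answers."""
    queue = deque((word, False) for word in words)  # (word, already_retried)
    results = {}
    while queue:
        word, retried = queue.popleft()
        verdict = judge(word)
        if verdict == "unsure" and not retried:
            queue.append((word, True))  # tip of the tongue: try again at the end
        else:
            # Anything other than "known" on the final try is marked unknown.
            results[word] = verdict == "known"
    return results


# Usage with a scripted judge in place of a person at the flashcards.
answers = {"苹果": ["known"], "蜡烛": ["unsure", "known"],
           "斡旋": ["unsure", "unsure"], "炫耀": ["unknown"]}
result = review(list(answers), lambda word: answers[word].pop(0))
print(result)
```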

The results

January 2008 – The 1,000 word test

| band (ranks) | number correct (of 200) | % correct |
|---|---|---|
| 1 – 200 | 176 | 88 |
| 201 – 400 | 162 | 81 |
| 401 – 600 | 109 | 54.5 |
| 601 – 800 | 94 | 47 |
| 801 – 1000 | 88 | 44 |

In this first trial, I tested all the words ranked 1 to 1,000 in batches of 200. Since every word in the range was tested, there is no sampling error. Thus, by adding the areas of the bars, I can say that at that time I knew 629 of the top 1,000 words.

January 8, 2008 – The 10,000 word test

| band (ranks) | number correct (of 100 sampled) | estimated known | % correct |
|---|---|---|---|
| 1 – 1000 | 67 | 670 | 67% |
| 1001 – 2000 | 39 | 390 | 39% |
| 2001 – 3500 | 18 | 270 | 18% |
| 3501 – 6000 | 21 | 525 | 21% |
| 6001 – 10000 | 12 | 480 | 12% |

This trial was done just a few days after the previous one, but covered a larger range of words. I tested a random sample of 100 words from each frequency band.

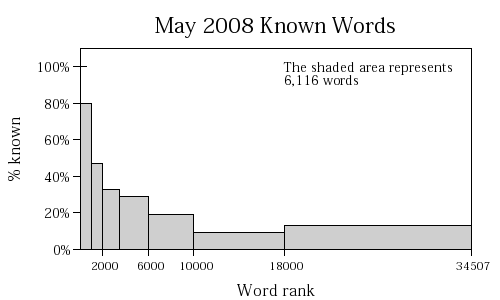

May 2008 – The 34,000 word test

| band (ranks) | number correct (of 100 sampled) | estimated known | % correct |
|---|---|---|---|
| 1 – 1000 | 80 | 800 | 80% |
| 1001 – 2000 | 47 | 470 | 47% |
| 2001 – 3500 | 33 | 495 | 33% |
| 3501 – 6000 | 29 | 725 | 29% |
| 6001 – 10000 | 19 | 760 | 19% |
| 10001 – 18000 | 9 | 720 | 9% |
| 18001 – 34507 | 13 | 2146 | 13% |

This trial was done a few months later and covered an even larger range: the full set of words from the Lancaster Corpus. The sample size was again 100 words per frequency band.

Analysis

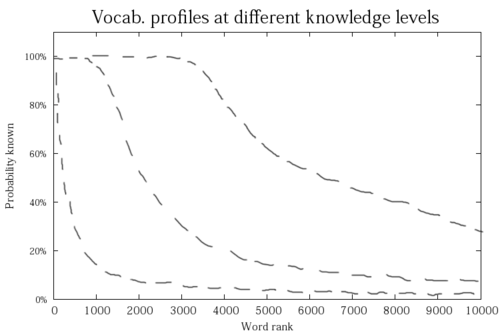

Despite the varying test conditions, these plots all show the same general shape: a high percentage of the top 1,000 words are known, but this quickly drops off to a low level. The relationship between knowing a word and its frequency has been noted for a long time (e.g., Palmer, 1917, p. 123). Word frequencies roughly follow Zipf's Law, whose curve has a similar shape, and it's tempting to assume these vocabulary profiles also follow Zipf's Law. To see why they don't, consider a learner who knows many more words than I did. He would know nearly 100% of the top 1,000 words, and probably nearly 100% of the next 1,000 words or more. His vocabulary profile wouldn't slope downward as quickly as mine, but would instead show a kind of “shelf” before dipping and leveling off with very rare words. For a native speaker, the shelf would extend quite far before dropping off.
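Here is a toy comparison in Python that makes the "shelf" argument concrete. Zipf's Law fixes the shape of the frequency curve: under f(r) ∝ 1/r, the word at rank 2,000 is always half as frequent as the word at rank 1,000, whoever the learner is. A knowledge profile, by contrast, can stay flat for a long stretch before it falls. The `toy_profile` function below is a made-up curve with hypothetical `shelf_edge` and `floor` parameters, chosen only to illustrate the idea. It is not fitted to any data in this post.

```python
def zipf_frequency(rank: int, s: float = 1.0) -> float:
    """Relative frequency of the word at `rank` under Zipf's law (rank 1 -> 1.0)."""
    return rank ** -s


def toy_profile(rank: int, shelf_edge: float, steepness: float = 3.0, floor: float = 0.0) -> float:
    """Hypothetical P(word known | rank): near 1 before `shelf_edge`, then dipping toward `floor`."""
    return floor + (1.0 - floor) / (1.0 + (rank / shelf_edge) ** steepness)


for rank in (500, 1000, 2000, 5000, 20000):
    print(f"rank {rank:>6}: zipf {zipf_frequency(rank):.5f}  "
          f"small vocab {toy_profile(rank, 1_500, floor=0.1):.2f}  "
          f"large vocab {toy_profile(rank, 30_000, floor=0.1):.2f}")
```

Moving `shelf_edge` shifts where the drop happens, while the Zipf column stays the same for every learner.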

Since I didn't know beforehand what the results would look like, all three of these trials can be considered a preliminary working out of methods. This is why I varied the ranges and band sizes for each run: I was trying to see the general shape of the full profile without sacrificing too much accuracy. Vocabulary profiles in existing research have concentrated on roughly the top 4,000 word families in English. These results therefore add something new: the same association between word frequency and the probability of knowing a word seems to continue even to the rarest words.

Even with the variations among test runs, I can still compare equivalent frequency ranges. For the top 1,000 ranked words, it seems I knew around 630 to 670 in January, rising to 800 in May. For the top 10,000 words, I knew around 2,300 in January and 3,250 in May. However, these results are based on a sample of only 500 words from the 10,000-word set. How large is the standard error of these estimates? Is this sample size large enough to say that there is a significant difference between January and May? This requires further investigation.
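One standard way to approach this question is the textbook variance estimate for stratified random sampling. Within each band of N words with n sampled, the sampled proportion p has estimated variance (1 − n/N)·p(1 − p)/(n − 1); this is scaled by N² and summed over the bands. The sketch below rests on several assumptions that are not part of the original analysis:

- each band's words were a simple random sample without replacement;
- the January and May samples are independent;
- a normal approximation is good enough for a rough z-score.

The function names are hypothetical.

```python
import math

# (band_size, words_sampled, words_known) for ranks 1-10,000, from the tables above.
JAN_2008 = [(1000, 100, 67), (1000, 100, 39), (1500, 100, 18), (2500, 100, 21), (4000, 100, 12)]
MAY_2008 = [(1000, 100, 80), (1000, 100, 47), (1500, 100, 33), (2500, 100, 29), (4000, 100, 19)]


def stratified_estimate(bands):
    """Return (estimated known words, standard error) for a per-band random sample."""
    total = 0.0
    variance = 0.0
    for size, sampled, known in bands:
        p = known / sampled
        total += size * p
        if sampled < size:  # a full census of the band contributes no sampling error
            # Estimated variance of the band proportion, with finite population correction.
            var_p = (1 - sampled / size) * p * (1 - p) / (sampled - 1)
            variance += size ** 2 * var_p
    return total, math.sqrt(variance)


def z_score(before, after):
    """Rough z-score for the change between two independent stratified estimates."""
    (est_a, se_a), (est_b, se_b) = stratified_estimate(before), stratified_estimate(after)
    return (est_b - est_a) / math.sqrt(se_a ** 2 + se_b ** 2)


jan, jan_se = stratified_estimate(JAN_2008)
may, may_se = stratified_estimate(MAY_2008)
print(f"January: {jan:.0f} ± {jan_se:.0f}, May: {may:.0f} ± {may_se:.0f}")
print(f"z = {z_score(JAN_2008, MAY_2008):.1f}")
```

The ± figures are one standard error, not confidence intervals. Because the sampling assumptions are mine, the output is only a rough guide to the question above.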

As for the question “how many words did I know in 2008”, there is another aspect of these plots that adds some difficulty. Adding up the results from May, it seems the answer is around 6,100 words. But note in the plot that even at the tail end of the rarest words in the LCMC, I still seemed to have a 10% chance of knowing a word. I’ll write more on that later, but some of this may be due to words that I’ve never seen or studied before but are so easily guessed that they are considered “known”: compound words, transliterations from English, proper names, etc. If this is occurring, then what would the result be if I had used a word list from a larger corpus, and tested a frequency band from word ranks 50,000 to 100,000? If this ~10% baseline “knowledge” extends here, then this would add another 5,000 claimed words to my known word count. Thus, when using this profiling method to gauge one’s word knowledge, it’s important to consider what range of words this quantity applies to.
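The extrapolation in the paragraph above is simple arithmetic, but writing it down shows how directly the total depends on the range tested. This sketch assumes a flat guessing baseline across a hypothetical extra band. The function name is made up for illustration.

```python
def baseline_words(first_rank: int, last_rank: int, baseline_rate: float) -> float:
    """Words counted as 'known' in a rank band if a flat guessing baseline held throughout it."""
    band_size = last_rank - first_rank + 1
    return band_size * baseline_rate


# A hypothetical extra band of ranks 50,001-100,000 at a ~10% baseline.
extra = baseline_words(50_001, 100_000, 0.10)
print(extra)  # 5000.0
```

Each further band of N words adds about 0.1 × N to the count, whether or not those words were ever studied.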

2 Comments to 'Counting Known Chinese Words – Part II'

February 6, 2011

Amazing and extremely fascinating. Do you perhaps still have that program you wrote back in 2008? How many do you know at present?

February 7, 2011

The Perl script I wrote to generate the list is here. This version gets the frequency counts from an SQL database containing the original LCMC data, so it won’t work out of the box. However, it would be trivial to use word rank data from a flat file, or any SQL database containing word plus frequency and rank.
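The Perl script itself isn't reproduced here. As a rough illustration of the flat-file route, the Python sketch below assumes a hypothetical tab-separated file of `word<TAB>count` lines. It ranks words by count, then looks each one up in a file using CC-CEDICT's line format, `Traditional Simplified [pin1 yin1] /gloss 1/gloss 2/`. The function names are made up, and only the first dictionary entry for each word is used.

```python
import io
import re

# CC-CEDICT line: Traditional Simplified [pin1 yin1] /gloss 1/gloss 2/
CEDICT_LINE = re.compile(r"^(\S+) (\S+) \[([^\]]*)\] /(.+)/\s*$")


def parse_cedict(lines):
    """Map each simplified headword to its (pinyin, glosses) entries."""
    entries = {}
    for line in lines:
        if line.startswith("#"):  # header and comment lines
            continue
        match = CEDICT_LINE.match(line)
        if match:
            _traditional, simplified, pinyin, glosses = match.groups()
            entries.setdefault(simplified, []).append((pinyin, glosses.split("/")))
    return entries


def load_ranked_words(lines):
    """Read 'word<TAB>count' lines and return the words from most to least frequent."""
    counts = []
    for line in lines:
        if line.strip():
            word, count = line.rstrip("\n").split("\t")
            counts.append((word, int(count)))
    counts.sort(key=lambda pair: pair[1], reverse=True)  # stable: ties keep file order
    return [word for word, _ in counts]


def flashcard_rows(words, cedict):
    """Build tab-separated 'word, pinyin, definition' rows; unknown words get blank fields."""
    rows = []
    for word in words:
        pinyin, glosses = cedict.get(word, [("", [])])[0]  # first entry only
        rows.append("\t".join([word, pinyin, "; ".join(glosses)]))
    return rows


cedict = parse_cedict(io.StringIO(
    "# CC-CEDICT sample\n"
    "蘋果 苹果 [ping2 guo3] /apple/\n"
    "學習 学习 [xue2 xi2] /to learn/to study/\n"))
ranked = load_ranked_words(io.StringIO("学习\t120\n苹果\t45\n的\t9000\n"))
for row in flashcard_rows(ranked, cedict):
    print(row)
```

In practice, the ranked list would be cut into bands and sampled before building the rows.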

I did do a few more trials after these: once in November 2009, and once in January 2011 using a slightly different method. Using a consistent benchmark of the top 10,000 words, I can compare word counts of 3,250 in 2008, 5,000 in 2009, and 5,400 in 2011. Or, for the top 18,000 words: 3,970 in 2008, 7,425 in 2009, and 8,700 in 2011. The 2011 test covered a larger range of words, over 42,000. Over the whole range, the estimate was 13,000 known words! Is a third of my knowledge really in this region of rare words, or is the estimate inflated by a baseline of easily guessed words?

The 2009 data is interesting because I had spent most of 2009 studying the words from HSK levels 1 through 3 (out of the 4 levels). The 2009 plot shows a distinct bulge around words 2,000 to 3,000, instead of a smooth decrease. In contrast, I had spent the latter part of 2010 studying characters (to fill in some gaps in my knowledge). Maybe this helped raise the baseline of “known” rare words to around 19% immediately after this period.